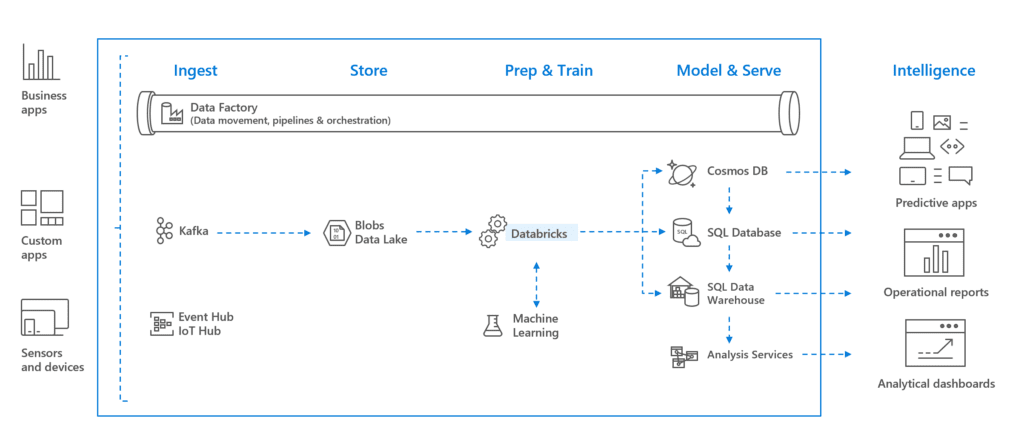

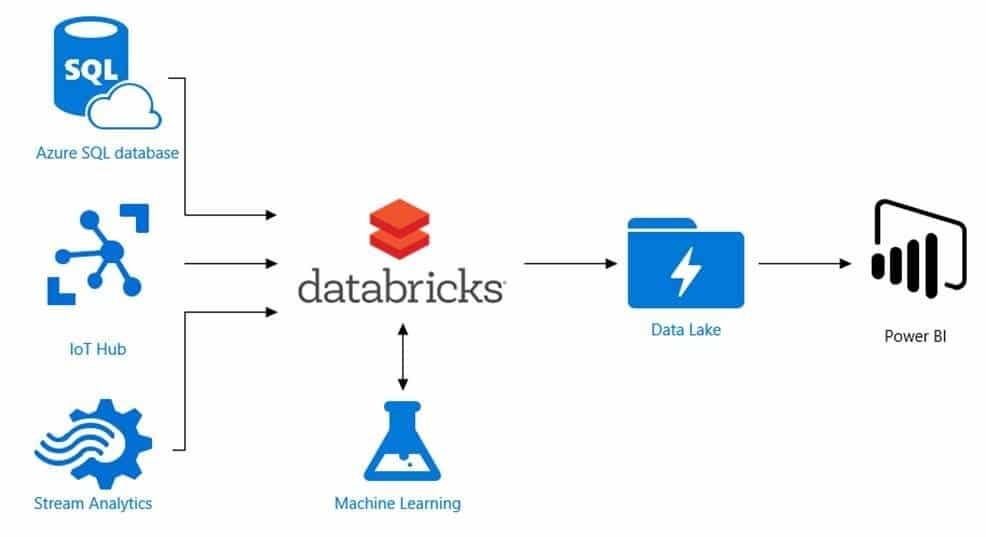

Azure Databricks is a powerful data analytics platform for Microsoft Azure cloud services. It combines the scalability and flexibility of Azure with the collaborative and interactive features of Databricks to provide a comprehensive solution for data analytics, machine learning, and big data processing.

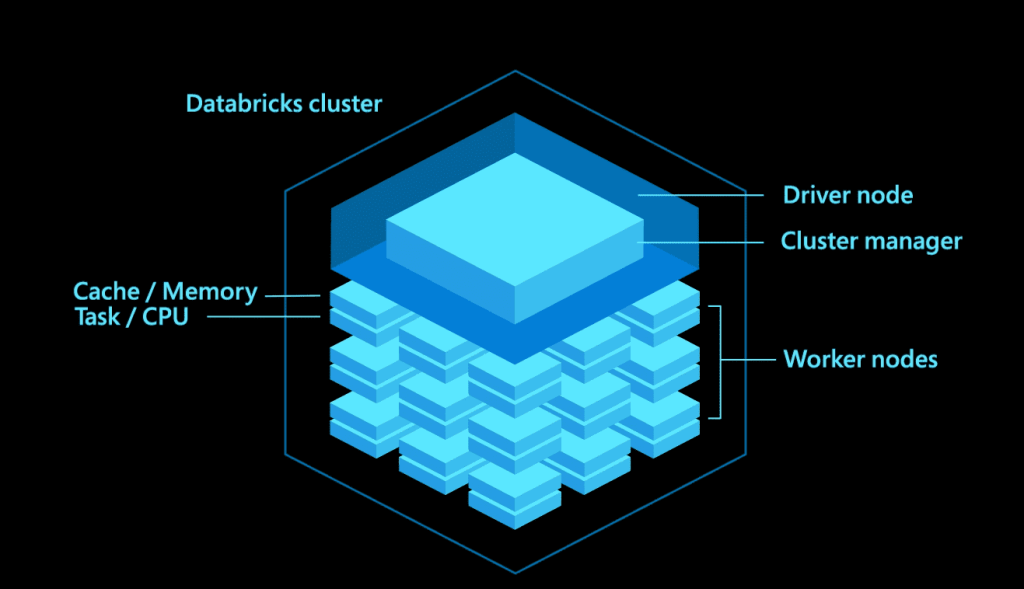

Built on Apache Spark, Azure Databricks simplifies the process of building and managing big data analytics and artificial intelligence solutions. It offers a unified workspace where data engineers, data scientists, and analysts can collaborate seamlessly, allowing them to process and analyze massive datasets efficiently.

With Azure Databricks, users can leverage advanced analytics capabilities, such as interactive querying, machine learning, and graph processing, to derive meaningful insights from their data. The platform supports numerous programming languages, including Python, Scala, R, and SQL, enabling users to work with their preferred language and tools.

Understanding Azure Databricks Pricing Model

Azure Databricks is widely used for analytics due to its powerful capabilities and seamless integration with the Azure ecosystem.

It provides a scalable and collaborative environment for processing and analyzing large volumes of data, making it an excellent option for businesses wishing to derive valuable insights from their data.

Azure Databricks uses a flexible pricing structure in which customers pay only for the resources they use. Here is an overview of the pricing model in tabular format:

| Pricing Aspect | Description |
| --- | --- |
| Databricks Unit (DBU) | Azure Databricks pricing is based on a unit called the Databricks Unit (DBU). A DBU represents the processing capacity consumed by Azure Databricks workloads, including data processing, machine learning, and data engineering tasks. Different VM instance types consume DBUs at different hourly rates. |
| Virtual Machines (VMs) | Azure Databricks runs on virtual machines (VMs), and the cost depends on the VM instance type and the number of instances used. VMs are provisioned based on workload requirements and can be resized as needed. You pay for the VMs themselves at Azure VM rates, plus DBU charges based on the DBU hours those VMs accumulate. |
| Storage | Azure Databricks allows users to store and access data in Azure storage services such as Azure Blob Storage and Azure Data Lake Storage. Storage is billed separately and depends on the storage service used and the amount of data stored. |
| Data Transfer | Data transfer between Azure Databricks and other Azure services in the same region is typically free. However, charges may apply to data transferred between Azure regions or out of Azure. Data transfer pricing depends on the volume of data transferred. |

Azure Databricks pricing can be complex because it depends on several factors, such as VM instance types, the number of instances, data storage, and data transfer. For a precise cost estimate, use the Azure Pricing Calculator or consult the official Azure Databricks pricing documentation.
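The following minimal Python sketch makes these four pricing aspects concrete by adding them into a single estimate. It assumes that VM charges and DBU charges are billed separately and then added together, which is how Azure Databricks compute is billed. The function name `estimate_total` is hypothetical, and every rate and quantity in the example call is an illustrative input rather than a published price.

```python
def estimate_total(vm_hours: float, vm_rate: float,
                   dbu_hours: float, dbu_rate: float,
                   storage_cost: float = 0.0,
                   transfer_cost: float = 0.0) -> float:
    """Rough total (USD) combining the four pricing aspects.

    All inputs are hypothetical values supplied by the caller:
    vm_hours      -- total instance-hours across all cluster VMs
    vm_rate       -- Azure VM price per instance-hour
    dbu_hours     -- total DBUs consumed (DBU-hours)
    dbu_rate      -- price per DBU-hour for the workload and tier
    storage_cost  -- billed separately by the storage service
    transfer_cost -- cross-region or egress charges, if any
    """
    vm_cost = vm_hours * vm_rate
    dbu_cost = dbu_hours * dbu_rate
    return round(vm_cost + dbu_cost + storage_cost + transfer_cost, 2)


# Example with made-up inputs: 40 VM-hours at $0.30 plus 30 DBU-hours at $0.55,
# plus $5 of storage and $1 of data transfer.
print(estimate_total(vm_hours=40, vm_rate=0.30, dbu_hours=30, dbu_rate=0.55,
                     storage_cost=5.0, transfer_cost=1.0))
```

Keeping every rate as an explicit parameter means the same function works for any region, VM type, or tier once you look up real prices.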

Purchase Models in the Azure Databricks Pricing Structure

The Azure Databricks pricing structure offers two purchase models: pay-as-you-go and pre-purchase plans.

Pay-as-you-go model:

| Workload | Standard tier (USD per DBU-hour) | Premium tier (USD per DBU-hour) |
| --- | --- | --- |
| All-Purpose Compute | 0.40 | 0.55 |
| Jobs Compute | 0.15 | 0.30 |
| Jobs Light Compute | 0.07 | 0.22 |
| SQL Compute | N/A | 0.22 |
| Delta Live Tables | N/A | 0.30 (Core), 0.38 (Pro), 0.54 (Advanced) |
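The sketch below treats the figures in the pay-as-you-go table as USD prices per DBU-hour and shows how the workload type and tier change the DBU portion of a bill. It covers only the first four workloads. The names `DBU_RATES` and `dbu_cost` are hypothetical, and VM charges are not included.

```python
# Pay-as-you-go prices per DBU-hour (USD), taken from the table above.
# None marks a workload that is not offered on that tier.
DBU_RATES = {
    "All-Purpose Compute": {"standard": 0.40, "premium": 0.55},
    "Jobs Compute": {"standard": 0.15, "premium": 0.30},
    "Jobs Light Compute": {"standard": 0.07, "premium": 0.22},
    "SQL Compute": {"standard": None, "premium": 0.22},
}


def dbu_cost(workload: str, tier: str, dbu_hours: float) -> float:
    """DBU charge (USD) for a number of DBU-hours on a given workload and tier."""
    rate = DBU_RATES[workload][tier]
    if rate is None:
        raise ValueError(f"{workload} is not offered on the {tier} tier")
    return round(dbu_hours * rate, 2)


# The same 100 DBU-hours cost less when run as a scheduled job than interactively.
print(dbu_cost("All-Purpose Compute", "premium", 100))
print(dbu_cost("Jobs Compute", "premium", 100))
```

The comparison illustrates why moving production pipelines from all-purpose clusters to jobs compute is a common cost-saving step.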

Databricks Unit pre-purchase plans:

Azure Databricks users can save up to 37% by pre-purchasing Databricks Commit Units (DBCUs). Pre-purchase plans are available for two terms: a 1-year plan and a 3-year plan. DBCUs normalize usage across Azure Databricks workloads and tiers into a single purchase.

1-year pre-purchase plan:

The 1-year pre-purchase plan offers the following tiers:

| Databricks commit units (DBCU) | Price (with discount) | Discount |
| --- | --- | --- |
| 25,000 | $23,500 | 6% |
| 50,000 | $46,000 | 8% |
| 100,000 | $89,000 | 11% |
| 200,000 | $172,000 | 14% |
| 350,000 | $287,000 | 18% |
| 500,000 | $400,000 | 20% |
| 750,000 | $577,500 | 23% |
| 1,000,000 | $730,000 | 27% |
| 1,500,000 | $1,050,000 | 30% |
| 2,000,000 | $1,340,000 | 33% |
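Every row in the 1-year table is consistent with one DBCU covering $1 of list-price usage, with the tier discount applied on top (for example, 25,000 × (1 − 0.06) = $23,500). The sketch below reproduces the table under that inferred assumption. It also adds a simple, hypothetical heuristic, `best_tier`, which picks the largest commitment that does not exceed the expected annual usage. The heuristic reflects the fact that unused pre-purchased units are wasted spend.

```python
# 1-year DBCU tiers from the table above: (commit units, discount fraction).
ONE_YEAR_TIERS = [
    (25_000, 0.06), (50_000, 0.08), (100_000, 0.11), (200_000, 0.14),
    (350_000, 0.18), (500_000, 0.20), (750_000, 0.23), (1_000_000, 0.27),
    (1_500_000, 0.30), (2_000_000, 0.33),
]


def discounted_price(dbcu: int, discount: float) -> float:
    """Price (USD) of a commitment, assuming 1 DBCU = $1 of list-price usage."""
    return round(dbcu * (1 - discount), 2)


def best_tier(expected_usage_usd: float):
    """Hypothetical heuristic: largest tier whose commit fits expected usage, else None."""
    eligible = [tier for tier in ONE_YEAR_TIERS if tier[0] <= expected_usage_usd]
    return max(eligible) if eligible else None


for units, discount in ONE_YEAR_TIERS:
    print(f"{units:>9,} DBCU -> ${discounted_price(units, discount):,.0f}")
```

For an expected annual usage of $120,000 at list price, `best_tier(120_000)` selects the 100,000-DBCU tier. Usage beyond the commitment would then be billed at pay-as-you-go rates.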

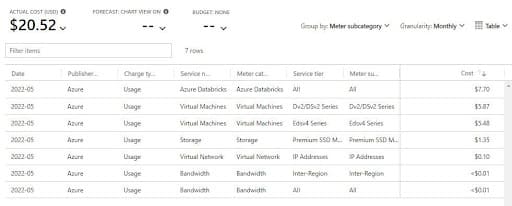

Azure Databricks Granular Cost Breakdown with Custom Tags and Default Tags

Azure Databricks provides granular cost breakdown capabilities through the use of custom tags and default tags. Custom tags allow you to assign specific attributes or labels to your Databricks resources, while default tags provide predefined attributes based on the Azure resource type.

The following hypothetical example shows a cost breakdown using custom tags and default tags for Azure Databricks resources:

| Resource | Custom Tags | Default Tags | Cost |
| --- | --- | --- | --- |
| Databricks | Environment: Production | Environment: Default | $500 |
| Workspace | Department: Finance, Project: X | Department: IT | $200 |
| Cluster | Environment: Development, Owner: A | Environment: Default | $300 |
| Notebook | Department: Marketing | Department: Default | $50 |
| Job | Owner: B | Department: Default | $100 |

The “Custom Tags” column in the above table represents the custom attributes assigned to each resource. For example, the Databricks resource has a custom tag “Environment” with a value of “Production.” The “Default Tags” column represents the predefined attributes provided by Azure based on the resource type. These attributes are automatically assigned and can be used for cost allocation and management.

The cost associated with each resource is mentioned in the “Cost” column. By leveraging custom and default tags, you can gain insights into the cost distribution across different attributes and identify cost drivers within your Databricks environment.

Custom tags let you further categorize your resources based on your organizational needs. For example, you might use custom tags to track resources by department, project, environment, or any other relevant attribute. This enables more detailed cost analysis and chargeback processes within your organization.

Default tags, on the other hand, are applied automatically. Azure Databricks adds default tags such as Vendor, Creator, ClusterName, and ClusterId to the resources it launches for a cluster. These tags provide a consistent baseline for categorizing and managing resources within your Azure subscription.

Combining custom and default tags allows you to create a comprehensive cost breakdown that aligns with your organization’s resource management and cost allocation requirements. This allows you to optimize spending, track expenses, and gain better visibility into the cost distribution within your Azure Databricks environment.
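Once costs carry tags, a chargeback report amounts to grouping costs by one tag key and summing them. The sketch below does this over records that mirror the hypothetical example table, using only the custom tags. It assumes the records have already been exported with their tags attached, for example from a cost export. The names `RECORDS` and `cost_by_tag` are hypothetical.

```python
from collections import defaultdict

# Hypothetical records mirroring the example table: (resource, custom tags, cost in USD).
RECORDS = [
    ("Databricks", {"Environment": "Production"}, 500),
    ("Workspace", {"Department": "Finance", "Project": "X"}, 200),
    ("Cluster", {"Environment": "Development", "Owner": "A"}, 300),
    ("Notebook", {"Department": "Marketing"}, 50),
    ("Job", {"Owner": "B"}, 100),
]


def cost_by_tag(records, key: str) -> dict:
    """Sum cost per value of one tag key; resources without the key go under 'untagged'."""
    totals = defaultdict(float)
    for _resource, tags, cost in records:
        totals[tags.get(key, "untagged")] += cost
    return dict(totals)


print(cost_by_tag(RECORDS, "Environment"))
print(cost_by_tag(RECORDS, "Department"))
```

A large "untagged" bucket is itself a useful signal: it shows how much spend cannot yet be attributed, and where tagging policies should be tightened.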

Features and Benefits of the Databricks Free Trial

The Databricks Free Trial offers various features and benefits that enable users to explore and experience the capabilities of the Databricks platform. This trial period allows users to leverage the power of Databricks for a limited time without any upfront cost. Let’s delve into the features and benefits of the Databricks Free Trial:

- Scalable Data Processing: Databricks provides a scalable framework for distributed data processing that enables you to process large datasets and perform complex analytics tasks efficiently.

- Unified Analytics: With Databricks, you can combine data engineering, data science, and business analytics on a single collaborative platform. This integration enables seamless collaboration and faster insights generation.

- Apache Spark Integration: Databricks is built on Apache Spark, a powerful open-source data processing framework. It provides easy integration with Spark, allowing you to leverage Spark’s data processing, machine learning, and streaming analytics capabilities.

- Automated Infrastructure Management: Databricks manages the underlying infrastructure, including provisioning and scaling, allowing you to focus on data analysis and application development without worrying about infrastructure management.

- Collaboration and Notebooks: Databricks offers collaborative features that enable teams to work together effectively. It provides interactive notebooks for code development, data exploration, and documentation, facilitating collaboration and knowledge sharing.

- Machine Learning Capabilities: Databricks provides built-in machine learning libraries and frameworks that facilitate developing and deploying machine learning models. It supports popular frameworks like TensorFlow and PyTorch.

- Data Visualization and Dashboards: Databricks allows you to visualize your data and create interactive dashboards using popular visualization tools. This helps in data exploration, understanding patterns, and sharing insights with stakeholders.

Comparison of Databricks Platforms

When evaluating Databricks across cloud providers, it’s essential to consider the main attributes and functions each one offers. This comparison gives an overview of Databricks on the three major clouds: Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP). Let’s explore the features and differences among these platforms:

Databricks Pricing on Amazon Web Services

Databricks offers various instance types on AWS, each with its own specifications and associated costs. These instance types determine the computing power, memory capacity, and storage capabilities available for your Databricks environment.

Databricks on AWS follows a pay-as-you-go model based on usage duration: you are charged for the time your Databricks clusters are running. In the simplified view used here, the cost is the instance hours consumed multiplied by the hourly rate for the selected instance type. In practice, Databricks charges are metered in DBUs, and the underlying EC2 instances are billed separately by AWS.

To provide a clearer understanding of the pricing, the following table presents a sample of Databricks pricing on AWS based on instance types and hourly rates:

| Instance Type | Hourly Rate ($) |
| --- | --- |
| Standard_DS3_v2 | 0.33 |
| Standard_DS4_v2 | 0.52 |
| Standard_DS5_v2 | 0.74 |
| MemoryOptimized_DS4_v2 | 0.69 |
| MemoryOptimized_DS5_v2 | 0.98 |
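The simplified AWS calculation described above (instance hours multiplied by the hourly rate) can be sketched as follows, using the sample rates from the table. The function name `cluster_cost` is hypothetical. The cluster shape in the example (4 instances for 10 hours) is an illustrative assumption.

```python
# Sample hourly rates (USD per instance-hour) from the table above.
HOURLY_RATES = {
    "Standard_DS3_v2": 0.33,
    "Standard_DS4_v2": 0.52,
    "Standard_DS5_v2": 0.74,
    "MemoryOptimized_DS4_v2": 0.69,
    "MemoryOptimized_DS5_v2": 0.98,
}


def cluster_cost(instance_type: str, num_instances: int, hours: float) -> float:
    """Instance-hours consumed multiplied by the hourly rate of the chosen type."""
    instance_hours = num_instances * hours
    return round(instance_hours * HOURLY_RATES[instance_type], 2)


# 4 Standard_DS3_v2 instances running for 10 hours = 40 instance-hours.
print(cluster_cost("Standard_DS3_v2", 4, 10))
```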

Databricks Pricing On Microsoft Azure

The pricing for Databricks on Azure is based on the concept of DBUs (Databricks Units). A DBU is a processing capacity unit that combines computing, memory, and networking resources. The number of DBUs required depends on the selected instance type and the duration of usage.

Databricks on Azure offers different instance types to cater to various workload requirements. Each instance type has a specific number of DBUs associated with it, which determines the pricing.

To provide a clearer understanding of the pricing, the following table presents a sample of Databricks pricing on Azure based on instance types and corresponding DBUs:

| Instance Type | DBUs |
| --- | --- |
| Standard_DS3_v2 | 4 |
| Standard_DS4_v2 | 8 |
| Standard_DS5_v2 | 16 |
| MemoryOptimized_DS4_v2 | 14 |
| MemoryOptimized_DS5_v2 | 28 |
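On Azure, the DBU portion of the bill is the DBUs consumed multiplied by the price per DBU-hour. This sketch treats the table's DBU figures as DBUs consumed per node per hour, which is an assumption about the table's units. It takes the per-DBU price as a parameter, for example $0.55 for All-Purpose Compute on the Premium tier from the pay-as-you-go table. VM charges are billed separately and are not included. `azure_dbu_cost` is a hypothetical name.

```python
# DBUs per node per hour by instance type (assumed units), from the table above.
DBUS_PER_HOUR = {
    "Standard_DS3_v2": 4,
    "Standard_DS4_v2": 8,
    "Standard_DS5_v2": 16,
    "MemoryOptimized_DS4_v2": 14,
    "MemoryOptimized_DS5_v2": 28,
}


def azure_dbu_cost(instance_type: str, nodes: int, hours: float,
                   price_per_dbu: float) -> float:
    """DBU charge (USD): DBU rate x nodes x hours x price per DBU-hour (VM cost excluded)."""
    dbu_hours = DBUS_PER_HOUR[instance_type] * nodes * hours
    return round(dbu_hours * price_per_dbu, 2)


# 2 Standard_DS4_v2 nodes for 5 hours = 80 DBU-hours, priced at $0.55 per DBU-hour.
print(azure_dbu_cost("Standard_DS4_v2", 2, 5, 0.55))
```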

Databricks Pricing for Google Cloud Platform

Databricks on GCP follows a pricing model based on Databricks Units (DBUs). A DBU represents the processing power required for running Databricks workloads. The number of DBUs needed depends on the chosen instance type and the duration of usage.

Databricks on GCP offers a range of instance types to accommodate different workload requirements. Each instance type has a specific number of DBUs associated with it, which determines the pricing. Instance types differ in compute power, memory capacity, and storage capabilities.

To provide a clearer understanding of the pricing, the following table presents a sample of Databricks pricing on GCP based on instance types and corresponding DBUs:

| Instance Type | DBUs |
| --- | --- |
| n1-standard-4 | 6 |
| n1-standard-8 | 12 |
| n1-standard-16 | 24 |
| n1-highmem-8 | 16 |
| n1-highmem-16 | 32 |
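A Databricks cluster consists of one driver node plus a number of worker nodes, and each node consumes DBUs according to its instance type. The sketch below totals DBU-hours for such a cluster on GCP. It treats the table's figures as DBUs per node per hour (an assumption about units) and uses a hypothetical function name, `cluster_dbu_hours`. Multiplying the result by the applicable price per DBU-hour, which is not listed here, gives the DBU charge.

```python
# DBUs per node per hour by instance type (assumed units), from the table above.
GCP_DBUS = {
    "n1-standard-4": 6,
    "n1-standard-8": 12,
    "n1-standard-16": 24,
    "n1-highmem-8": 16,
    "n1-highmem-16": 32,
}


def cluster_dbu_hours(driver: str, worker: str, num_workers: int, hours: float) -> float:
    """DBU-hours for one driver plus `num_workers` identical workers over `hours`."""
    dbus_per_hour = GCP_DBUS[driver] + GCP_DBUS[worker] * num_workers
    return dbus_per_hour * hours


# An n1-highmem-8 driver (16) with 3 n1-standard-8 workers (3 x 12 = 36) for 2 hours.
print(cluster_dbu_hours("n1-highmem-8", "n1-standard-8", 3, 2))
```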

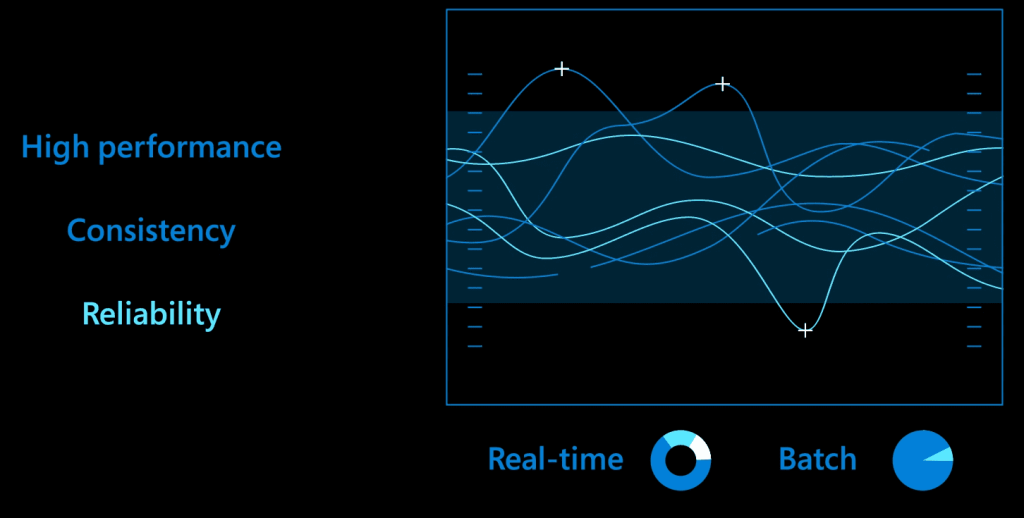

Data Analytics Clusters: Meaning

A data analytics cluster is a group of interconnected computing resources designed to process and analyze large volumes of data. These clusters are commonly used in data analytics platforms and frameworks to perform data processing tasks such as data transformation, querying, and advanced analytics.

The primary purpose of a data analytics cluster is to enable high-performance and scalable data processing. By leveraging the distributed nature of the cluster, large datasets can be divided into smaller partitions, and each partition can be processed simultaneously across multiple compute nodes.
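The divide-process-combine pattern described above can be illustrated on a single machine with Python's standard library. In the sketch below, worker threads stand in for compute nodes and a final sum stands in for the driver combining partial results. This is an illustration of the pattern only. On a real cluster, a framework such as Spark distributes partitions across machines, and CPython threads would not speed up CPU-bound work like this. The function names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def partition(data, num_partitions):
    """Split a list into roughly equal, contiguous partitions."""
    if not data:
        return []
    size = -(-len(data) // num_partitions)  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def process_partition(rows):
    """Per-partition work; here, a simple sum of squares."""
    return sum(r * r for r in rows)


def parallel_sum_of_squares(data, num_workers=4):
    parts = partition(data, num_workers)
    # Each partition is handled by a separate worker thread, standing in for a node.
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        partials = list(pool.map(process_partition, parts))
    # Combine the partial results, as a driver would.
    return sum(partials)


print(parallel_sum_of_squares(list(range(1, 101))))
```

Because each partition is processed independently, adding workers (or nodes) lets the same job scale to larger inputs. Only the small partial results need to be combined at the end.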

Data analytics clusters are often associated with technologies like Apache Hadoop and Apache Spark, which provide distributed computing capabilities. These clusters can be provisioned and managed in several cloud computing systems, including Google Cloud Platform (GCP), Amazon Web Services (AWS), and Microsoft Azure, or can be deployed on-premises using dedicated hardware.

Azure Databricks Consultation: An EPC Group Approach To Ensure Business Value

At EPC Group, we take a comprehensive approach to Azure Databricks consultation, focusing on delivering business value and maximizing the benefits of this powerful data analytics platform. Our approach is designed to help organizations leverage Azure Databricks effectively and make informed decisions based on their unique business requirements.

We prioritize knowledge transfer and collaboration throughout the consultation process, equipping your team with the skills and understanding needed to use Azure Databricks fully. With EPC Group’s approach to Azure Databricks consultation, you can realize the full potential of an advanced analytics platform and gain actionable insights that fuel your organization’s success.

Frequently Asked Questions

1. Is Azure Databricks free?

Azure Databricks offers a free trial that lets users explore and evaluate the platform. However, Azure Databricks is not free beyond the trial period. How much users are charged then depends on the pricing model, which considers factors such as compute resources, storage, and data transfer.

2. How is Azure Databricks cost calculated?

Azure Databricks cost is calculated from several elements: the type and size of the compute resources used (VM charges plus the DBUs those VMs consume), the amount of storage used, and data transfer. The pricing model accounts for each of these factors and provides a breakdown of costs, allowing organizations to optimize their spending based on their specific needs and usage patterns.

3. Can we use Databricks as ETL?

Databricks can be used as an ETL (Extract, Transform, Load) tool. With its powerful data processing capabilities and built-in integration with various data sources, Databricks enables organizations to extract data from different sources, perform transformations and manipulations on the data, and load it into the desired target systems for further analysis and insights.

4. Is Azure Data Factory free?

Azure Data Factory is not entirely free but offers a limited free tier. The free tier allows users to have up to five active pipelines, with a monthly data movement quota of 1,500 pipeline runs. Beyond the free tier, costs are incurred based on data volume, activities, and integration scenarios.